Some Models are Useful: Conceptual Probability, and Feeling Good About Randomness

Players are terrible with randomness.

I say that wholly and without equivocation because players are people, and let me tell you:

People

Are

Terrible

With

Randomness

This wouldn’t be a problem, except that games often rely on randomness to generate their model. Because, to the player, the randomness and the model are one and the same, a player’s lack of understanding of probability too often manifests as a rejection of the model.

Models

A model (for those unaccustomed to the language) is a representation, a simulacrum, an imitation, of the world as players understand it. That world is not necessarily our world, but some consistent reality in which the game takes place: the world of the Magic Circle. While models are meant to represent this Magic Circle reality, they do not (cannot) replicate it. No game could fully replicate the intricate realities of an entire world, and even if we could, it’s often not cost-effective (or desirable) to do so. Instead, we model. Our choices about what to spend our time and energy representing well (with “high fidelity”) and what to disregard (or present with “low fidelity”) both define what our model communicates and are defined by what we want the players to experience. As consultants, we have a saying about this trade-off between cost-effectiveness and reality that quotes George Box on what he called “worrying selectively”:

“All models are wrong, but some are useful.”

Faith in the Model

When players believe the model is not useful, they disengage from it. My game design experience began with strategic decision-making games for the Royal Australian Air Force, where keeping my superiors engaged with my model was critical. A death knell for any tactical or operational game was to hear a senior officer say “okay, but that’s a sim-ism,” meaning an artefact of the simulation, divorced from reality: “It wouldn’t really work like that in the real world.” If I heard that, I knew the officer had disengaged, and I knew I’d lost the opportunity for my model to deliver whatever I had programmed it to deliver. This situation describes a loss of what I call “Faith in the Model”: a belief (not knowledge) that the system appropriately represents (not replicates) reality. In Serious Games, loss of faith in the model is a loss of belief that the lessons to be learned are relevant. In Recreational Games, loss of faith in the model is a loss of engagement, enjoyment, and the overall feeling of “fairness”. In any game where you’re asking a player to make decisions and experience consequences on top of a fictional world, loss of faith in the model means, explicitly, losing the player from your game.

Feels Before Reals

I highlighted above that Faith in the Model is a belief, not knowledge, and that it rests on an overall feeling of “fairness”. That feeling is at the heart of what Sid Meier (of the Civilization series, MicroProse and Firaxis Games) calls the Winner Paradox:

“When you reward players for discovering a cool place on the map, here’s 100 gold, the player gladly accepts that, doesn’t question ‘did I earn that?’ Players are very much inclined to accept anything you give them, gladly, and feel it was their own clever play, their own incredible strategy, that earned them that cool reward.

On the other hand, if something bad happens to the player ‘your game is broken’, ‘there’s something horribly wrong’, ‘the game is cheating’.”

Fundamental to the rest of this post, this is not a discussion about what IS, it’s a discussion about how it FEELS.

Novel Digital Solutions

At first the solution seemed obvious, similar even to what we’ve used in other gameful approaches: Telegraphing. If we show the players the chances (if we help them to understand their decisions), and allow them to make informed, consequence-driven decisions, they will not (cannot) have bad feelings about those consequences.

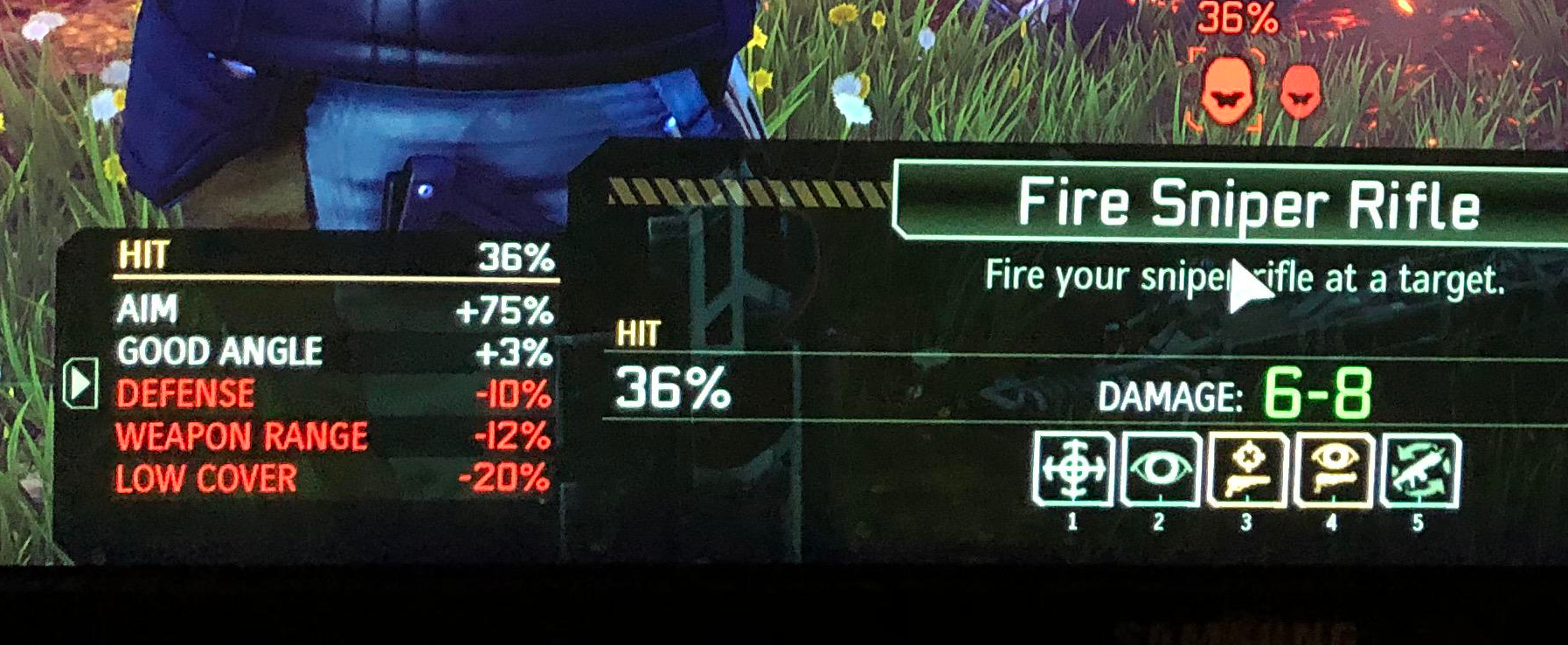

XCOM has become incredibly communicative about how gamestates influence player chances, and even they have to lie (Image from r/XCOM2. XCOM 2, 2016, Firaxis Games).

But unfortunately, our brains are broken in half when it comes to probability, both in how we understand the numbers shown to us and in how we feel about them.

Players will see a 10% chance of success, and roll the dice (figuratively or literally), and, upon success, declare themselves tactical geniuses who were able to overcome probability. Players will see a 10% chance of failure, and roll the dice (figuratively or literally), and, upon failure, will declare the game the biggest piece of absolute dogshit.

“This game is absolute dogshit” - A player who has experienced a normal distribution curve.

But it goes deeper than players retroactively casting a Halo Effect over their own play; it goes as deep as a fundamental misunderstanding of what these numbers mean. Sid Meier, again, talks about player psychology in competitive strategy battles: “At a certain point, players feel like they’re going to win the battle. […] ‘Three! Three to one! Three is big, one is small, shouldn’t I have won!?’”

Jake Solomon, lead designer for XCOM 2 (above), describes it thus: “Players may view that number not from a mathematical sense, but from an emotional sense. If you see an 85 percent chance to hit, you’re not looking at that as a 15 percent chance of missing. If you thought about it that way, it’s not an inconceivable chance you’re going to miss the shot. Instead, you see an 85 percent chance, and you think, ‘That’s close to a hundred; that basically should not miss.’”
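Solomon’s point is easy to put into numbers. A minimal sketch, assuming every shot is independent and using an arbitrary handful of shot counts for illustration: the chance of at least one miss across several 85% shots is one minus the chance that they all hit.

```python
def p_at_least_one_miss(hit_chance: float, shots: int) -> float:
    """Probability that at least one of `shots` independent shots misses."""
    return 1.0 - hit_chance ** shots


# A few illustrative sequences of 85% shots.
for shots in (1, 3, 5, 10):
    risk = p_at_least_one_miss(0.85, shots)
    print(f"{shots:>2} shots at 85%: {risk:.0%} chance of at least one miss")
```

Under that assumption, five 85% shots already give a better-than-even chance (about 56%) that at least one of them misses, even though each one individually “basically should not miss”.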

Increasing player understanding of probability just doesn’t work. So, if Muhammad will not come to the Mountain, we must drag our statistical outcomes to the player’s understanding.

Lies, Damned Lies, and Statistics

Solomon’s response to the emotional experience of missing a “basically should not miss” shot in XCOM 2 was to tweak some percentages in the player’s favour: if you miss a shot, your next high-percentage shot gets a secret +10% to hit. If you miss that one, it stacks another 10%, and so on. The goal of a system like this is to remove repeated failure, even when independent random rolls would naturally produce streaks (in the same way that flipping a coin 1,000 times could run tails seven times in a row), because (say it with me) players are terrible with probability. Especially with streaks, and especially with streaks of bad luck. Solomon’s goal is to preserve players’ engagement (Faith in the Model) by ensuring that normal, expected, regular results don’t feel like “bad luck”.
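The coin-flip example above understates how ordinary such streaks are. The sketch below computes, exactly, the probability that a run of independent flips contains at least one streak of a given length; it assumes a fair coin and treats “seven tails in a row” as “a run of seven or more tails”.

```python
def p_run_of_tails(n_flips: int, run_length: int, p_tails: float = 0.5) -> float:
    """Probability that n_flips independent flips contain at least one run of
    run_length or more consecutive tails (exact dynamic programme)."""
    # state[j]: probability that no qualifying run has happened yet and the
    # sequence currently ends in exactly j consecutive tails (0 <= j < run_length).
    state = [1.0] + [0.0] * (run_length - 1)
    hit = 0.0  # probability mass that has already produced a qualifying run
    for _ in range(n_flips):
        new_state = [0.0] * run_length
        for j, mass in enumerate(state):
            new_state[0] += mass * (1 - p_tails)  # heads resets the streak
            if j + 1 == run_length:
                hit += mass * p_tails  # this tails completes a qualifying run
            else:
                new_state[j + 1] += mass * p_tails  # streak grows by one
        state = new_state
    return hit


print(f"P(7+ tails in a row somewhere in 1,000 flips) = {p_run_of_tails(1000, 7):.1%}")
```

Under these assumptions the result comes out well above 90%: across a thousand flips, a seven-tails streak is not bad luck, it is the expected outcome.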

This kind of lie, epitomised by pity timers, secret bonuses, and selective randomness, has become an industry standard, and it’s one I support. It creates a better play experience for the player and a stronger Magic Circle, and it allows players to make “more informed” decisions (because the outcomes are closer to their heuristics). But none of that was helpful for me, because I was making tabletop exercises for Air Rank commanders.
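For comparison, the digital lie itself is cheap to build. The sketch below is a hypothetical roller modelled on Solomon’s stacking bonus as described above; it is not Firaxis code. The class name, the 50% cut-off for a “high-percentage shot”, clearing the bonus on any hit, and the 100% cap are all assumptions.

```python
import random


class PityRoller:
    """Hypothetical hidden-bonus roller modelled on the stacking +10% described above.

    Assumptions (not confirmed by the article): a "high-percentage" shot is one
    displayed at 50% or more, any hit clears the bonus, and chance is capped at 100%.
    """

    def __init__(self, bonus_step=0.10, high_shot_threshold=0.50, rng=None):
        self.bonus_step = bonus_step                    # +10% per miss, per the article
        self.high_shot_threshold = high_shot_threshold  # assumed cut-off
        self.rng = rng if rng is not None else random.Random()
        self.hidden_bonus = 0.0                         # never shown to the player

    def shoot(self, displayed_chance: float) -> bool:
        """Roll one shot; the player only ever sees displayed_chance."""
        chance = displayed_chance
        if displayed_chance >= self.high_shot_threshold:
            chance = min(1.0, displayed_chance + self.hidden_bonus)
        hit = self.rng.random() < chance
        if hit:
            self.hidden_bonus = 0.0                # assumed: a hit clears the bonus
        else:
            self.hidden_bonus += self.bonus_step   # each miss stacks another step
        return hit


roller = PityRoller(rng=random.Random(2016))
print([roller.shoot(0.85) for _ in range(10)])
```

Everything that matters here lives in `hidden_bonus`, which is exactly the part a tabletop group watching every roll would never accept.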

Playing With an Open Hand

Tabletop is a bitch. Especially when playing with people who want to see the sausage get made, from start to finish. One solution, one lie, that has become accepted in tabletop play is for the GM to adjudicate when failure is “wrong” by lying about dice results (“fudging dice”). But, like XCOM’s lies, it needs to be a secret to preserve Faith in the Model. Unfortunately, fudging dice has become such an open secret that simply hiding dice results can ruin Faith in the Model: “If the model worked,” says our pretend player, “you’d roll out in the open so we could see.” That sausage-watching desire was a defining element of presenting strategic games to star officers in the Air Force: every resolution had to be done out in the open, with a defensible methodology and repeatable results.

Thankfully, I found a solution that worked, similar to the one Sid Meier found: identify the breakpoints.

Breakpoints of Conceptual Probability

The first breakpoint is at Certainty. If a player is SURE they will succeed, or sure they will fail, it makes no sense to engage with a randomiser; it can only disrupt the player’s understanding. Notably, XCOM 2 made the decision to cap hit chance at 95% (there’s always a chance you can fail). Similarly, early d100 tabletop RPGs like Dark Heresy (2008, Fantasy Flight Games) reserve the top five results for “failure no matter how high your skill” (a rule carried over from the earlier Warhammer Fantasy Roleplay [2nd edition, 2005, Black Industries] system). I understand the desire to avoid certainty and maintain some degree of unpredictability, but I truly do not believe it to be worth the cost. If players can generate certainty in uninteresting ways, the problem is not with certainty, but with your generation methods. Certainty is a valuable state to identify. Locking the maximum at 95% and pretending that isn’t certain does not work: as we’ve discussed, players see it as certain well before we arrive there.
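To see why a reserved failure band rules certainty out entirely, here is a generic roll-under d100 check that follows the description above (the top five results always fail). It is a sketch, not a transcription of the Dark Heresy or Warhammer rules, and `auto_fail_from` is a hypothetical parameter name.

```python
def d100_success_chance(skill: int, auto_fail_from: int = 96) -> float:
    """Chance that a roll-under d100 check succeeds when every roll from
    auto_fail_from to 100 fails, however high the skill."""
    successes = sum(
        1 for roll in range(1, 101)
        if roll <= skill and roll < auto_fail_from
    )
    return successes / 100


for skill in (40, 90, 100, 130):
    print(skill, d100_success_chance(skill))
```

Skill 40 gives 0.4 and skill 90 gives 0.9, but skills of 100 and 130 both stop at 0.95: no amount of player effort ever reaches the “Certain” breakpoint.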

I mentioned in the Twitter thread that spawned this ludosophy that getting players to commit to “Certain” was incredibly important from a pilot-development perspective. Some things in air combat are guesses; that’s the reality. They’re best guesses, formed by exceptional intelligence, a lot of math, and high-fidelity testing, but in the end, when a pilot puts out flares to defeat an infra-red missile, it’s a guess. Having a system that didn’t allow players to “cheat” certainty by saying things like “oh, it’s almost certain, so we’ll just say it works” was critical to developing the “Plan B” approach to air combat tactics in my junior pilots. What happens if, when you hit that radar-defeating notch, it doesn’t work? That’s a reality of pressing into a dogfight: you might lose someone on the way in. Not every time, maybe not most times. 99.9999% of the time you’re good to go, but as a flight-lead fighter pilot, I need you to think about your plan if your wingman never turns back in. Having a pilot stand in a room full of low-fidelity simulation and engaged colleagues and say “Kinematic defeat is certain. Radar defeat isn’t” made better pilots.

So I created a 3-tier system: certain success, certain failure, and 50/50. And…it worked. Humans, as bad as we are with probability, can handle 50/50. This works perfectly for non-experts, but expertise brings a higher capacity for fidelity. That’s something we haven’t yet discussed! Why is it that the designer finds conceptual probability easier to understand than the player? Expertise. Time at the coalface. Perspective. And expert players have that perspective too.
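The three-tier system is small enough to write down in full. A minimal sketch, assuming a seeded software coin stands in for the physical coin flipped at the table; the names `Tier` and `resolve` are hypothetical.

```python
import random
from enum import Enum


class Tier(Enum):
    CERTAIN_FAILURE = "certain failure"
    COIN_FLIP = "50/50"
    CERTAIN_SUCCESS = "certain success"


def resolve(tier: Tier, rng: random.Random) -> bool:
    """Resolve an action; only the 50/50 tier ever touches the randomiser."""
    if tier is Tier.CERTAIN_SUCCESS:
        return True
    if tier is Tier.CERTAIN_FAILURE:
        return False
    return rng.random() < 0.5  # stands in for a physical coin flipped at the table


rng = random.Random(7)
for tier in Tier:
    print(tier.value, resolve(tier, rng))
```

The design point is that two of the three branches never roll at all, so the argument at the table is about which tier an action belongs in, not about the dice.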

“The object of [a strategic/serious matrix game] is to generate a credible narrative from which we hope to gain insights into the situation […] In some technical fields, like Cyber, it can be advantageous to have an expert panel to decide on the success of an argument or the success probability, provided they can fully articulate the reasons why and generate reasons for failure.” The Matrix Games Handbook: Professional Application from Education to Analysis and Wargaming (Curry, Engle, Perla, 2018)

Working with experts, I found that I could split each side of the 50/50 again, giving a five-tier system with 75% and 25% added. This relied solely on the players’ capacity to conceptualise the nuances at play, and it was as far as I could go, no matter how expert the players. I experimented with 30%-60%-90% and found it gave a worse player outcome than 25-50-75. Players approached 30% and 60% the same way as 25% and 50% (but they were harder to resolve than a coin flip or two), and 90% was the killer. At 90%, players switch into that “almost certain” mode, and every 10% failure ripped the guts out of my model and disengaged the player. To restate: across the many games in which I tried success rates of 85% or more, every single failure made the player immediately start to disengage from the model. We’re talking top gun, A- and B-category fighter pilots. Commanding officers of Squadrons and Wings. Taking them past 75% was a fool’s errand. Now, not all of these disengagements were show-stoppers. A good game is robust to a little distance. But it was always shaky ground. The worst feedback I received on the legitimacy of the outcomes from my games came from players who had failed two 85%+ rolls within one game. Not even in a row, just within a game!
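Part of the appeal of 25-50-75 is that every tier resolves with at most two coin flips. The procedure below is an assumption (the article doesn’t spell one out): 75% fails only on two tails, and 25% succeeds only on two heads. Enumerating the four equally likely flip pairs confirms each tier’s odds; `resolve_five_tier` is a hypothetical name.

```python
from fractions import Fraction
from itertools import product

TIERS = (0, 25, 50, 75, 100)  # percent chance of success


def resolve_five_tier(tier: int, flips: tuple) -> bool:
    """Resolve with up to two coin flips (True = heads). Hypothetical procedure."""
    first, second = flips
    if tier == 0:
        return False
    if tier == 100:
        return True
    if tier == 50:
        return first              # a single flip decides
    if tier == 75:
        return first or second    # fail only on tails, tails
    if tier == 25:
        return first and second   # succeed only on heads, heads
    raise ValueError(f"not a conceptual tier: {tier}")


# Each of the four (first, second) pairs is equally likely with a fair coin.
for tier in TIERS:
    wins = sum(resolve_five_tier(tier, pair) for pair in product((True, False), repeat=2))
    print(f"{tier:>3}%: {Fraction(wins, 4)}")
```

A 30% or 60% tier has no equivalent with a coin or two, which is one concrete reason those values were harder to run at the table.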

One benefit of the 0-50-100 method that I haven’t seen discussed elsewhere is how hard it pushes players to generate certainty. This loops back to my earlier comment about XCOM 2 (“If players can generate certainty in uninteresting ways, the problem is not with certainty, but with your generation methods.”). I love helping push a pilot toward certainty, knowing they’re afraid to take a coin flip on a dogfight. I love watching them scratch for every inch of advantage:

“I take a mid-range missile shot.”

“Copy, Sir. Certain kill?”

“Negative. Uhhhh, that’s going to go to the coin flip, isn’t it? Okay. Before I shoot, I’ll climb to 40,000 feet and get radar look-down.”

“That’s burning a LOT of energy and a LOT of fuel to get that position, but I love it. Is a mid-range look-down shot certain, Sir?”

“Neg. I’m going to need more. Okay, let’s go for a single-target radar mode, and I’ll hand off the other enemy to my wingman.”

“Okay, so you’ve burned a bunch of gas and are risking an untargeted threat. Big spend. Certain?”

“Christ. No, I could have a terminal missile failure. Let’s double tap.”

“Okay, Sir, let me get this straight. You’re carrying 8,000lbs of fuel, you’re going to burn a massive chunk of that going high-and-fast, you’re carrying 4 missiles, and you’re going to burn HALF your stock to confirm this kill.”

“Affirm! I’m not going to a dogfight 1:1 here. Mark it certain.”

“Aye, Sir. Certain kill on hostile 21.”

Because the generation method is engaging, interesting, and creates dynamic consequences, we’ve built a great conflict! Now my pilot will have to manage his fuel on the next engagement (he can’t do that high-and-fast thing every time and still meet his time targets), plus he’s down half his missiles. Where Solomon (Firaxis) would say we’re removing a dynamic layer, my significant experience shows me that it’s more about changing focus than stripping out depth. Notably, Solomon’s latest title, Marvel’s Midnight Suns (2022), has removed hit chance completely, instead using a card-draw system that front-loads probability.

Conclusion

These lessons were learned through a hard fight with players, probability, and the messy human brain.

A player’s faith in your systems is integral to their engagement with your experiences.

Players don’t conceptualise probability “by the numbers”.

You will have to manipulate your outcomes to align them with your players’ expectations, and there are better and worse ways to do that

>=80% might as well be 100%

<=20% might as well be 0%

Certainty is a tool

If 100% or 0% is boring, it’s because your methods of generating certainty don’t ask for enough interesting trade-offs, or don’t offer enough interesting benefits for holding back.
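The thresholds above can be read as a mapping from a raw probability to the tier players actually feel. A minimal sketch, using the 80% and 20% cut-offs from the list and assuming (this part is not stated above) that everything in between snaps to the nearest of 25%, 50% and 75%; `conceptual_tier` is a hypothetical name.

```python
def conceptual_tier(p: float) -> int:
    """Map a raw success probability (0.0 to 1.0) to the percentage players feel."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p >= 0.80:
        return 100  # ">=80% might as well be 100%"
    if p <= 0.20:
        return 0    # "<=20% might as well be 0%"
    # Assumption: everything else snaps to the nearest quarter.
    return min((25, 50, 75), key=lambda tier: abs(tier - p * 100))


for raw in (0.10, 0.30, 0.60, 0.90):
    print(f"{raw:.0%} feels like {conceptual_tier(raw)}%")
```

Applied to the 30-60-90 experiment, this mapping files 30% under 25%, 60% under 50% and 90% under certain, which matches how those players behaved.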

Design Challenge

This one is kind of orthogonal to a lot of what we’ve spoken about, but I think it’s the most interesting one.

Take a game that has a percentage hit chance, a post-choice randomisation, or something along these lines. If you’re stuck and you’re familiar with it, use the free Dungeons & Dragons 5e Basic Rules (start at page 75 for “Making an Attack”), and ask yourself how you could build certainty into the system without sacrificing dynamic responsiveness. List:

What changes to the play experience?

Are decisions made in the same place?

What skills are the players now asked to show?

Does the game feel harder, or easier? What does that mean to you?

What is the first thing you want to discover in a playtest?
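Before redesigning, it can help to measure where certainty already sits in the baseline. Under the 5e attack rules, a d20 plus the attack bonus hits if it meets or beats the target’s Armor Class, a natural 20 always hits, and a natural 1 always misses. The sketch below enumerates the twenty faces and ignores advantage, disadvantage and every other modifier.

```python
def hit_chance(attack_bonus: int, armor_class: int) -> float:
    """Chance a single 5e attack roll hits; a natural 20 always hits,
    a natural 1 always misses."""
    hits = 0
    for face in range(1, 21):
        if face == 20:
            hits += 1
        elif face != 1 and face + attack_bonus >= armor_class:
            hits += 1
    return hits / 20


for bonus in (0, 5, 10, 20):
    print(f"+{bonus} vs AC 15: {hit_chance(bonus, 15):.0%}")
```

Against AC 15 this gives 30%, 55%, 80% and 95%. No bonus ever moves the result outside 5% to 95%, so any certainty you build in has to come from somewhere other than the roll, and a +10 attacker is already sitting in the “might as well be 100%” band.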